In recent weeks there has been much talk and debate about the development and use of ChatGPT. For those who are not aware, ChatGPT is an AI chatbot developed by Silicon Valley start-up OpenAI that can provide ‘human-like answers’ to complex questions. We’ve had several recent conversations in the office about chatbots, ChatGPT, Artificial Intelligence (AI), their uses and broader implications, including a healthy debate with our CEO, Floyd Woodrow. Luckily, we have our in-house authority on the subject, our Director of Cyber Security and Electronic Counter Measures, Gurpreet Singh Thathy, who studied AI at university, holds a BSc in the subject and continues to have a keen interest in all things AI.

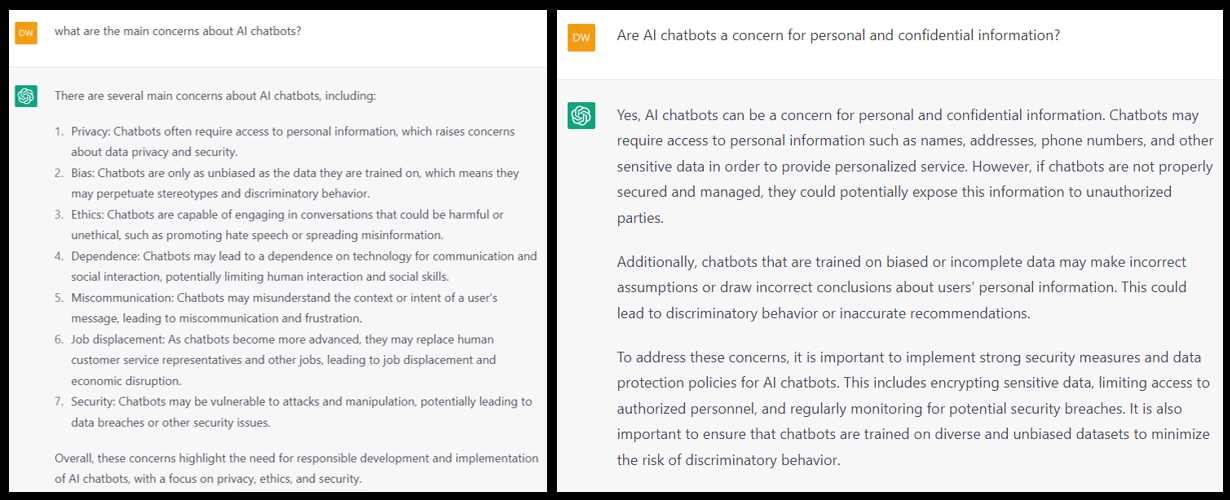

Keeping it simple, Gurpreet explained how technology within the AI field has accelerated considerably in recent years. However, he also flagged concerns over how information shared with chatbots like ChatGPT might be used to fine-tune their algorithms, or potentially be accessed by outsourced workers paid to check their answers. He also explained that input from users has to be stored and reviewed by humans to verify the accuracy of the chatbot’s answers. As with humans, chatbots sometimes find it difficult to separate facts from misinformation.

There are also privacy concerns: the OpenAI website says the company will “review conversations to improve our systems” and that conversations will be “reviewed by our AI trainers to improve our systems”. It also advises users not to share “sensitive information in conversations”. When we discussed this in the office, an interesting scenario came up: “What if you work in a regulated industry and decide to ask the chatbot questions?” You could inadvertently share confidential information with the chatbot.

The debate and concerns over the use of chatbots will continue. Like all evolutions in technology, chatbots can assist us in our everyday lives, but they come with risks. You only have to ask the chatbot what the main concerns are, and it lists seven excellent answers. The chatbot also admits that the handling of personal and confidential information is a potential concern. Valkyrie is keen to advocate technology but, as with previous technological advancements, we must all be aware of the potential risks involved, especially regarding personal and confidential data.